Description

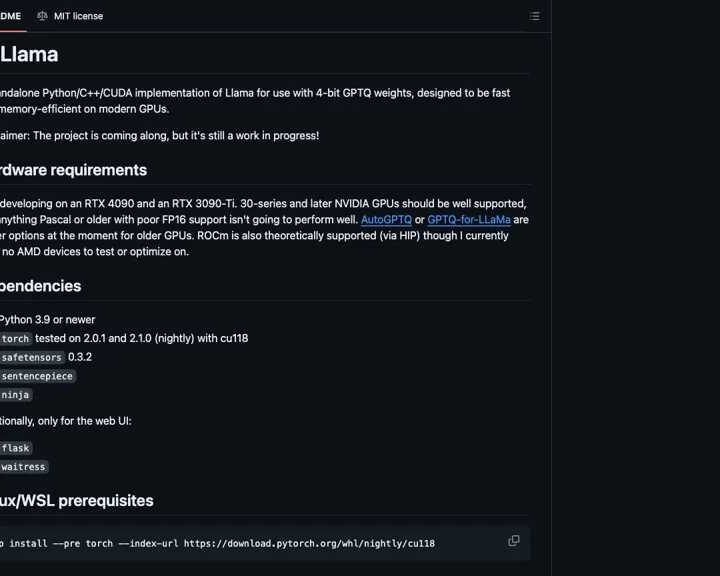

Exllama: Memory-Optimized LLaMA Implementation for High-Performance NLP on GPUs

Exllama is a memory-efficient rewrite of the Hugging Face Transformers implementation of LLaMA, built to run models with quantized weights. Because quantized weights occupy far less VRAM than 16-bit weights, it makes high-performance natural language processing (NLP) practical on a broader range of modern graphics processing units (GPUs), including NVIDIA’s RTX series.
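To make the memory saving concrete, here is a rough back-of-the-envelope sketch in Python. It only counts the weights themselves (parameters times bits per weight) and ignores quantization metadata, the key/value cache, and activations, so real usage will be higher. The function name and the nominal parameter counts are illustrative assumptions, not figures taken from Exllama.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the weights alone, in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


# Nominal model sizes (assumed round numbers, not exact parameter counts).
for name, n_params in [("7B", 7e9), ("13B", 13e9), ("33B", 33e9)]:
    fp16 = weight_memory_gib(n_params, 16)
    q4 = weight_memory_gib(n_params, 4)
    print(f"{name}: 16-bit ~ {fp16:.1f} GiB, 4-bit ~ {q4:.1f} GiB")
```

Under these assumptions, 4-bit weights need about a quarter of the space of 16-bit weights, which is what brings larger models within reach of consumer GPUs.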

Key Features and Benefits of Exllama

- Memory-Optimized LLaMA Execution: Run large language models (LLMs) like LLaMA with quantized weights, drastically reducing GPU memory consumption.

- Hugging Face Transformers Compatibility: Follows the LLaMA implementation from the Hugging Face Transformers library, which eases integration with that ecosystem.

- Sharded Model Support: Load models whose weights are split across multiple shard files, which helps when working with larger checkpoints.

- Configurable Processor Affinity: Pin the process to specific CPU cores to tune performance for different hardware setups.

- Flexible Stop Conditions: Gain control over text generation by defining custom stop conditions for more precise AI outputs.

- High-Performance NLP: Run demanding NLP applications at high speed without requiring high-end, high-memory GPUs.
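As an illustration of the stop-condition idea in the list above, the following Python sketch shows one common way such a check can work: generation halts when a stop string appears in the output or when a token budget runs out. This is not Exllama's API; `generate_until`, `fake_stream`, and the stop string are hypothetical names and data chosen for the example.

```python
from typing import Iterable, Sequence


def generate_until(tokens: Iterable[str], stop_strings: Sequence[str], max_tokens: int) -> str:
    """Concatenate streamed tokens until a stop string appears or the budget is used."""
    text = ""
    for count, token in enumerate(tokens, start=1):
        text += token
        for stop in stop_strings:
            idx = text.find(stop)
            if idx != -1:
                # Trim the stop string and anything generated after it.
                return text[:idx]
        if count >= max_tokens:
            break
    return text


# Stand-in for a model's token stream (hypothetical data, no model involved).
fake_stream = ["Hello", ",", " world", ".", "\n", "User", ":", " hi"]
result = generate_until(fake_stream, stop_strings=["\nUser:"], max_tokens=32)
print(repr(result))
```

Checking the accumulated text rather than each token matters because a stop string such as `"\nUser:"` can span several tokens.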

Exllama Use Cases and Applications

- Efficient AI Model Deployment: Deploy robust NLP applications powered by LLaMA on modern GPUs, even those with limited VRAM, thanks to its memory-saving design.

- AI Research & Experimentation: Researchers can experiment with LLaMA model configurations and quantized weights while using fewer GPU resources.

- Resource-Constrained Environments: Enable AI development and deployment in environments where high-memory GPUs are unavailable or cost-prohibitive.

- Custom Chatbot Development: Build and optimize custom chatbots and AI assistants leveraging Exllama’s efficient inference capabilities.

- Accelerated Text Generation: Speed up text generation for creative writing, code completion, and other generative AI tasks.

Target Audience for Exllama

- AI Developers: Seeking efficient solutions for deploying high-performance NLP and LLM applications.

- Machine Learning Engineers: Focused on optimizing model performance and resource utilization on GPUs.

- AI Enthusiasts & Hobbyists: Interested in exploring and experimenting with advanced large language models like LLaMA on consumer-grade hardware.

- Researchers: Working on NLP advancements who need efficient model execution.

Exllama Tool Ratings

- Accuracy and Reliability: 4.4/5

- Ease of Use: 4.2/5

- Functionality and Features: 3.7/5

- Performance and Speed: 4.4/5

- Customization and Flexibility: 3.5/5

- Data Privacy and Security: 4/5

- Support and Resources: 3.8/5

- Cost-Efficiency: 3.6/5

- Integration Capabilities: 3.7/5

- Overall Score: 3.92/5
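The overall score is consistent with a plain unweighted average of the nine category ratings. The listing does not state how the score is calculated, so the averaging method in this short Python check is an assumption.

```python
# Category ratings copied from the list above.
ratings = {
    "Accuracy and Reliability": 4.4,
    "Ease of Use": 4.2,
    "Functionality and Features": 3.7,
    "Performance and Speed": 4.4,
    "Customization and Flexibility": 3.5,
    "Data Privacy and Security": 4.0,
    "Support and Resources": 3.8,
    "Cost-Efficiency": 3.6,
    "Integration Capabilities": 3.7,
}

# Assumed method: unweighted mean, rounded to two decimals.
overall = sum(ratings.values()) / len(ratings)
print(round(overall, 2))
```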

By providing a highly memory-efficient and performance-optimized implementation of LLaMA, Exllama empowers developers and researchers to harness the power of large language models without the traditional barriers of extensive hardware requirements. Explore Exllama on Proaitools for your next AI innovation.